API extensions

We added support for API extensions (1617).

You can register your custom accessors to `DataFrame`, `Seires`, and `Index`.

For example, in your library code:

py

from databricks.koalas.extensions import register_dataframe_accessor

register_dataframe_accessor("geo")

class GeoAccessor:

def __init__(self, koalas_obj):

self._obj = koalas_obj

other constructor logic

property

def center(self):

return the geographic center point of this DataFrame

lat = self._obj.latitude

lon = self._obj.longitude

return (float(lon.mean()), float(lat.mean()))

def plot(self):

plot this array's data on a map

pass

...

Then, in a session:

py

>>> from my_ext_lib import GeoAccessor

>>> kdf = ks.DataFrame({"longitude": np.linspace(0,10),

... "latitude": np.linspace(0, 20)})

>>> kdf.geo.center

(5.0, 10.0)

>>> kdf.geo.plot()

...

See also: https://koalas.readthedocs.io/en/latest/reference/extensions.html

Plotting backend

We introduced `plotting.backend` configuration (1639).

Plotly (>=4.8) or other libraries that pandas supports can be used as a plotting backend if they are installed in the environment.

py

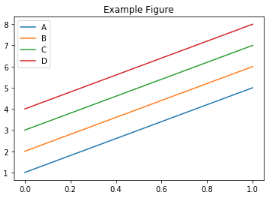

>>> kdf = ks.DataFrame([[1, 2, 3, 4], [5, 6, 7, 8]], columns=["A", "B", "C", "D"])

>>> kdf.plot(title="Example Figure") defaults to backend="matplotlib"

python

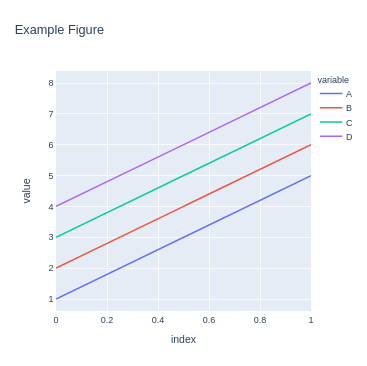

>>> fig = kdf.plot(backend="plotly", title="Example Figure", height=500, width=500)

>>> same as:

>>> ks.options.plotting.backend = "plotly"

>>> fig = kdf.plot(title="Example Figure", height=500, width=500)

>>> fig.show()

Each backend returns the figure in their own format, allowing for further editing or customization if required.

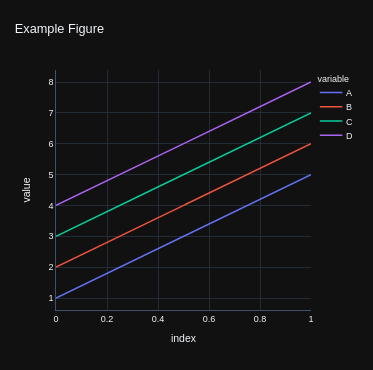

python

>>> fig.update_layout(template="plotly_dark")

>>> fig.show()

Koalas accessor

We introduced `koalas` accessor and some methods specific to Koalas (1613, 1628).

`DataFrame.apply_batch`, `DataFrame.transform_batch`, and `Series.transform_batch` are deprecated and moved to `koalas` accessor.

py

>>> kdf = ks.DataFrame({'a': [1,2,3], 'b':[4,5,6]})

>>> def pandas_plus(pdf):

... return pdf + 1 should always return the same length as input.

...

>>> kdf.koalas.transform_batch(pandas_plus)

a b

0 2 5

1 3 6

2 4 7

py

>>> kdf = ks.DataFrame({'a': [1,2,3], 'b':[4,5,6]})

>>> def pandas_filter(pdf):

... return pdf[pdf.a > 1] allow arbitrary length

...

>>> kdf.koalas.apply_batch(pandas_filter)

a b

1 2 5

2 3 6

or

py

>>> kdf = ks.DataFrame({'a': [1,2,3], 'b':[4,5,6]})

>>> def pandas_plus(pser):

... return pser + 1 should always return the same length as input.

...

>>> kdf.a.koalas.transform_batch(pandas_plus)

0 2

1 3

2 4

Name: a, dtype: int64

See also: https://koalas.readthedocs.io/en/latest/user_guide/transform_apply.html

Other new features and improvements

We added the following new features:

DataFrame:

- `tail` (1632)

- `droplevel` (1622)

Series:

- `iteritems` (1603)

- `items` (1603)

- `tail` (1632)

- `droplevel` (1630)

Other improvements

- Simplify Series.to_frame. (1624)

- Make Window functions create a new DataFrame. (1623)

- Fix Series._with_new_scol to use alias. (1634)

- Refine concat to handle the same anchor DataFrames properly. (1627)

- Add sort parameter to concat. (1636)

- Enable to assign list. (1644)

- Use SPARK_INDEX_NAME_FORMAT in combine_frames to avoid ambiguity. (1650)

- Rename spark columns only when index=False. (1649)

- read_csv: Implement reading of number of rows (1656)

- Fixed ks.Index.to_series() to work properly with name paramter (1643)

- Fix fillna to handle "ffill" and "bfill" properly. (1654)